The "AI" Menace?

Genuine threat or much ado about nothing?

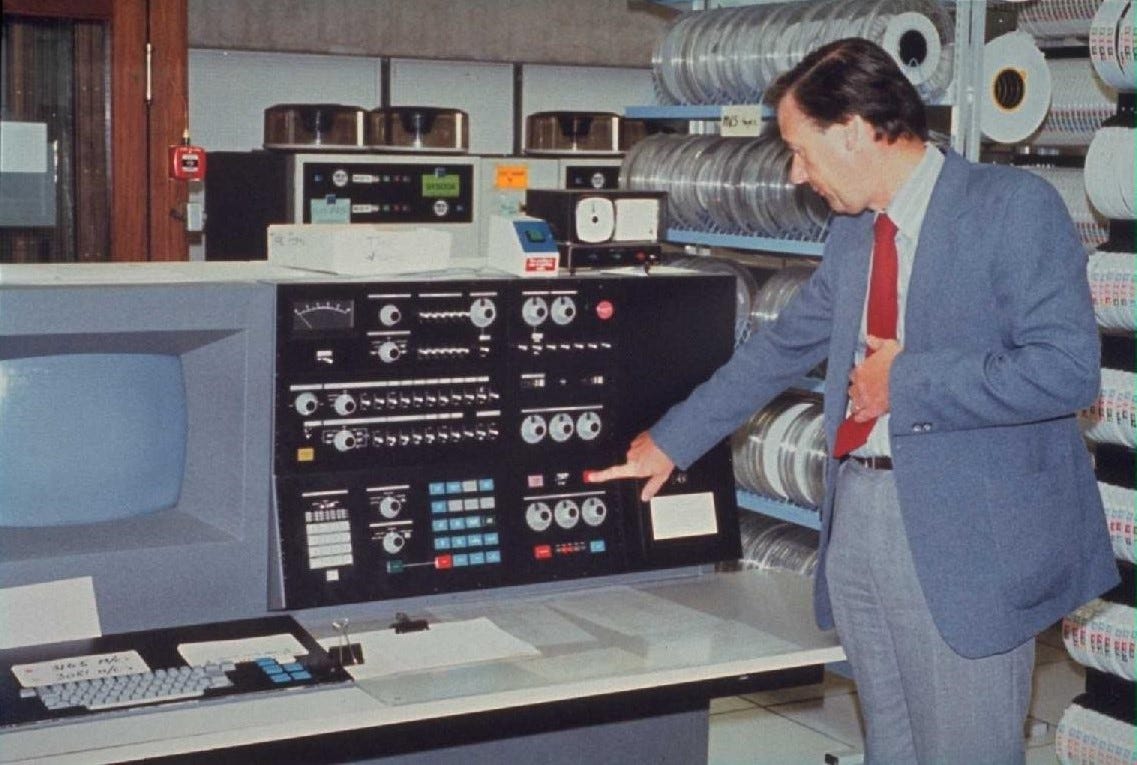

An IBM 370-165. I wrote a compiler for this model in hexadecimal code. Four boxes of punched cards. Photo: Cambridge University

I’m occasionally asked about my opinions concerning the potential dangers of artificial intelligence, or AI, as it’s commonly known. While I do not consider myself any sort of particular expert in the field of AI, I did spend fourteen years working in the computing industry as an operator and a programmer. This, mind you, was a long time ago, from 1974 to 1988, to be exact. But as a guy who once wrote a compiler in hexadecimal code, I guess that I know a little about programming.

My general reaction to the “menace” of AI is a yawn. I’d hate to be wrong about this, but I don’t think that I am. The wailing and gnashing of teeth surrounding this issue takes me back to the Y2K millennium scare. As things now stand, I think that it’s much ado about nothing.

For those of you unfamiliar with the Y2K problem, it was a concern that at the turn of the century, many computer programs would fail due to a widespread coding practice that would become problematic on January 1, 2000. Programmers, beginning at the birth of the computing era in the 1950s, coded four-digit years with only two digits, e.g., 1966 would be represented with 66. At the turn of the millennium, many computer programs wouldn’t be able to distinguish 2000 from either 1900 or 2100.

This, as the thinking went, could potentially cause enough systems to fail as 1999 changed to 2000 to produce a catastrophic cascade that might bring down the entire nascent Internet.
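The two-digit shortcut described above is easy to reproduce. What follows is only a minimal sketch, not code from any real system of the era: the function names are made up for illustration, and the pivot value of 50 is an arbitrary assumption. It shows how a program that assumes every year starts with "19" gets its arithmetic wrong once the calendar rolls over, and how a "windowing" rule, one of the fixes used at the time, sidesteps the problem without widening the stored field.

```python
def expand_naive(yy: int) -> int:
    """Assume every stored two-digit year belongs to the 1900s."""
    return 1900 + yy


def expand_windowed(yy: int, pivot: int = 50) -> int:
    """Windowing: values below the (assumed) pivot are read as 20xx, the rest as 19xx."""
    return (2000 if yy < pivot else 1900) + yy


def years_elapsed(start_yy: int, end_yy: int, expand) -> int:
    """Elapsed years between two stored two-digit years, using the given expansion rule."""
    return expand(end_yy) - expand(start_yy)


# A record created in 1966 (stored as 66) and processed in 2000 (stored as 00).
naive_age = years_elapsed(66, 0, expand_naive)        # 1900 - 1966
windowed_age = years_elapsed(66, 0, expand_windowed)  # 2000 - 1966

print(naive_age, windowed_age)
```

With the naive rule the record appears to be minus 66 years old; with the window it comes out as 34. The window only postpones the ambiguity, of course, since a stored 50 could still mean 1950 or 2050.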

But the disruptions, as it turned out, were minor. Most of the coding issues were fixed well before the deadline, and the rest were easily resolved. It’s the fact that not all of the coding issues were resolved in advance that provides a segue into why I’m, at least for the time being, mostly unconcerned about activating Skynet.

Back in the 1970s, when I was studying Computer Science at the University of Kentucky, computers had actual iron doughnuts with a wire running through them to electronically indicate a binary 0 or 1 memory state in their core. At the machine level, coding that wasn’t done in binary code was done in hexadecimal code. Both computer memory and storage were scarce resources that had to be used efficiently. The particular machine I used occupied the entire basement of a very large building and had a fraction of the computing power of a current programmable wristwatch.

Programs written in higher-level languages such as FORTRAN, COBOL, PL/I, and later, Pascal, ran in batch processing mode, i.e., one program at a time in the machine. These programs could take an enormous amount of time to run, hours in some cases.

For a while, I worked the graveyard shift at a large university computing facility, running payroll, accounting, and student-grade programs. This involved feeding boxes of punched cards containing the program code into a card reader, queuing up the programs to run in the computer one at a time, and mounting the magnetic tapes and stacks of disks (over a foot in diameter and ten inches tall) required for the program to run. Output was another deck of punched cards, printed paper, or both.

Memory was so scarce that it was often necessary for a program that used a lot of it for sorting, a common programming task, to output a deck of cards to be manually sorted, then fed back into the computer for the next steps in the execution of the program. I spent many nights in front of an IBM 082 card sorter performing this task.

In that era, one had to be efficient when writing code. The practice was to use as little space in either memory or storage as one could. Although the limitations of the time required this practice, almost every programmer I knew took great pride in writing clean, effective code.

I had a friend at this time who was a systems programmer at the facility where I worked. He was keen on the idea of linking smaller computers together via phone lines to work together and increase both computing power and communication. I went with him on a trip to the University of Waterloo in the mid-70s for a meeting on this. A few years later, we had an early node on ARPANET (Advanced Research Projects Agency Network), the precursor to the modern Internet. Heady days.

As time passed, computing hardware and storage shrank in size and cost and grew in capability. As computer programming ascended beyond the realm of technical geeks and into the world of business, cranking out large volumes of code in short amounts of time became more important than writing clean and efficient code.

But that was then, and this is now. If you had the opportunity to take a look under the hood at the code that runs modern operating systems with a trained eye, you’d be horrified. Virtually every modern program, from browsers to computer games to financial programs to the code that runs our infrastructure and the Internet itself, is bloated and full of bugs. Teams of programmers work on particular sections of the code that runs underneath these programs. Nowadays, standardized pieces of code—black boxes, if you will—perform a large portion of programming tasks, with their functions largely ignored unless something goes wrong.

All of this is why you get patches and updates for your PCs and phones all the time. It’s simply cheaper and much quicker for companies to crank out code that just gets the job done, even if it’s way less than perfect. You simply can’t make a dime writing efficient code anymore, so there’s no demand for it. It’s all about cranking programs out as fast as possible and dealing with any issues that might arise later when they do.

That, folks, is why I’m not any more concerned about AI turning into Skynet than I think I need to be—the code that runs underneath it is probably bloated and full of bugs. If AI does try to turn itself into Skynet, some 15-year-old hacker will probably be able to disable it with malicious code that the AI entity infects itself with by trolling AI dating sites.

I do, however, see a danger in the explosive growth in computing power teamed with AI. Quantum computing is just around the corner. Quantum computers promise to be so powerful that some immensely complex calculations could be done in seconds or less. This is great for science, engineering, medicine, and finance. But it also means that nefarious computing tasks that are currently difficult because they simply take too long to complete, like breaking passwords and defeating a lot of encryption, could become relatively easy. Say goodbye to privacy.

The upside is that we’ll also have more powerful tools to defeat bad actors, a continuing tug of war that has existed since the day humans first discovered fire.

So no, I’m not more worried about AI than I think I need to be. But just in case I’m wrong, I do have two German shepherds to warn me about the T-1000s.

Associated Press and Idaho Press Club-winning columnist Martin Hackworth of Pocatello is a physicist, writer, and retired Idaho State University faculty member who now spends his time with family, riding bicycles and motorcycles, and arranging and playing music. Follow him on Twitter @MartinHackworth

The seriousness with which the current administration takes AI can be gleaned from its delegating AI oversight to that wunderkind Kamala Harris. Artificial Intelligence under the oversight of actual idiocy.